Update

Thanks to some valuable advice (courtesy of Tazman-audio), I’ve made a few small changes that keep synchronization independent of framerate. My original strategy was to grab the current sample of the audio source’s playback and compare it to the sample value of the next expected beat (discussed in more detail below). Although this worked fine, Unity’s documentation makes little mention of the accuracy of this value, aside from it being preferable to using Time.time. Furthermore, the initial synch between the start of audio playback and the BeatCheck function suffered from a discrepancy, albeit a very small one.

Here is the change to the Start method in the “BeatSynchronizer” script that enforces synching with the start of the audio:

    public float bpm = 120f; // Tempo in beats per minute of the audio clip.
    public float startDelay = 1f; // Number of seconds to delay the start of audio playback.

    public delegate void AudioStartAction(double syncTime);
    public static event AudioStartAction OnAudioStart;

    void Start ()
    {
        double initTime = AudioSettings.dspTime;
        audio.PlayScheduled(initTime + startDelay);
        if (OnAudioStart != null) {
            OnAudioStart(initTime + startDelay);
        }
    }

The PlayScheduled method starts the audio clip’s playback at the absolute time (on the audio system’s dsp timeline) given in the function argument. The correct start time is then this initial value plus the given delay. This same value is then broadcast to all the beat counters that have subscribed to the AudioStartAction event, which ensures their alignment with the audio.

This necessitated a small change to the BeatCheck method as well, as can be seen below. The current sample is now calculated using the audio system’s dsp time instead of the clip’s sample position, which also alleviated the need for wrapping the current sample position when the audio clip loops.

    IEnumerator BeatCheck ()
    {
        while (audioSource.isPlaying) {
            // Sample position derived from the dsp timeline rather than the clip.
            currentSample = (float)AudioSettings.dspTime * audioSource.clip.frequency;

            if (currentSample >= (nextBeatSample + sampleOffset)) {
                foreach (GameObject obj in observers) {
                    obj.GetComponent<BeatObserver>().BeatNotify(beatType);
                }
                nextBeatSample += samplePeriod;
            }

            yield return new WaitForSeconds(loopTime / 1000f);
        }
    }
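The arithmetic behind the dsp-time approach can be sketched outside Unity. This is only an illustration, not the project's code: `DspBeatMath`, `TimeToSamples`, and `BeatsElapsed` are hypothetical names, and measuring elapsed samples from the sync time broadcast by OnAudioStart is an assumption about how a beat counter could use that value. The math stays in double here so that long-running dsp times don't lose precision.

```csharp
using System;

// Hypothetical helper (not part of the project): plain arithmetic on
// dsp-timeline times such as those returned by AudioSettings.dspTime.
public static class DspBeatMath
{
    // Converts a time in seconds to a sample count at the given sample rate.
    public static double TimeToSamples(double seconds, int sampleRate)
    {
        return seconds * sampleRate;
    }

    // Number of whole beats elapsed since the scheduled start (syncTime).
    // Assumes samplePeriod is the number of samples between beats.
    public static long BeatsElapsed(double dspTime, double syncTime,
                                    int sampleRate, double samplePeriod)
    {
        double elapsedSamples = TimeToSamples(dspTime - syncTime, sampleRate);
        if (elapsedSamples < 0.0) {
            return 0; // Scheduled playback has not started yet.
        }
        return (long)Math.Floor(elapsedSamples / samplePeriod);
    }
}
```

Because the dsp timeline only moves forward, nothing in this calculation has to wrap when the clip loops.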

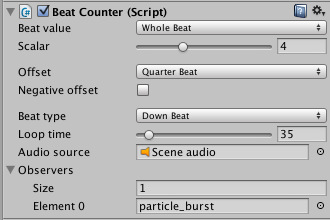

Lastly, I decided to add a nice feature to the beat synchronizer that allows you to scale up the the beat values by an integer constant. This is very useful for cases where you might want to synch to beats that transcend one measure. For example, you could synchronize to the downbeat of the second measure of a four-measure group by selecting the following values in the inspector:

Scaling up the beat values by a factor of 4 treats each beat as a measure instead of a single beat (assuming 4/4 time).
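One way to picture the scaling is as a multiplier on the sample period formula given in the original post below. The sketch here is an assumption about how such a factor could be applied, not a copy of the project's implementation; `BeatScaling`, `ScaledSamplePeriod`, and `beatScalar` are hypothetical names.

```csharp
using System;

// Hypothetical helper: applies an integer scale factor to the sample period.
public static class BeatScaling
{
    // samplePeriod = (60 / (bpm * beatValue)) * sampleRate, multiplied by
    // beatScalar. With beatValue = 1 (quarter note) and beatScalar = 4 in
    // 4/4 time, the result is the number of samples in one measure.
    public static double ScaledSamplePeriod(double bpm, double beatValue,
                                            int sampleRate, int beatScalar)
    {
        if (beatScalar < 1) {
            throw new ArgumentOutOfRangeException(nameof(beatScalar));
        }
        return (60.0 / (bpm * beatValue)) * sampleRate * beatScalar;
    }
}
```

For example, at 120 bpm and 44100 Hz a quarter note lasts 22050 samples, so a scale factor of 4 gives 88200 samples, or two seconds per measure.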

This same feature exists for the pattern counter as well, allowing a great deal of flexibility and control over what you can synchronize to. There is a new example scene in the project demonstrating this.

Github project here.

I did, however, come across a possible bug in the PlayScheduled function: a short burst of noise can be heard occasionally when running a scene. I’ve encountered this both in the Unity editor (version 4.3.3) and in the build product. This does not happen when starting the audio using Play or checking “Play On Awake”.

Original Post

Lately I’ve been experimenting and brainstorming different ways in which audio can be tied in with gameplay, or even drive gameplay to some extent. This is quite challenging because audio/music is so abstract, but rhythm is one element that has been successfully incorporated into gameplay for some time. To experiment with this in Unity, I wrote a set of scripts that handle beat synchronization to an audio clip. The github project can be found here.

The way I set this up to work is by comparing the current sample of the audio data to the sample of the next expected beat to occur. Another approach would be to compare the time values, but this is less accurate and less flexible. Sample accuracy ensures that the game logic follows the actual audio data, and avoids the issues of framerate drops that can affect the time values.

The following script handles the synchronization of all the beat counters to the start of audio playback:

    public float bpm = 120f; // Tempo in beats per minute of the audio clip.
    public float startDelay = 1f; // Number of seconds to delay the start of audio playback.

    // Parameterless here so that it matches the OnAudioStart() call below
    // and the lambda subscription in the BeatCounter script.
    public delegate void AudioStartAction();
    public static event AudioStartAction OnAudioStart;

    void Start ()
    {
        StartCoroutine(StartAudio());
    }

    IEnumerator StartAudio ()
    {
        yield return new WaitForSeconds(startDelay);

        audio.Play();
        if (OnAudioStart != null) {
            OnAudioStart();
        }
    }

To accomplish this, each beat counter instance adds itself to the event OnAudioStart, seen here in the “BeatCounter” script:

    void OnEnable ()
    {
        BeatSynchronizer.OnAudioStart += () => { StartCoroutine(BeatCheck()); };
    }

When OnAudioStart is called above, the handlers of all beat counters that have subscribed to this event are invoked. In this case, each handler starts the coroutine BeatCheck, which contains most of the logic for determining when beats occur. (The () => {} statement is C#’s lambda syntax.)

The BeatCheck coroutine runs at a specific frequency given by loopTime, instead of running each frame in the game loop. For example, if a high degree of accuracy isn’t required, this can save on the CPU load by setting the coroutine to run every 40 or 50 milliseconds instead of the 10 – 15 milliseconds that it may take for each frame to execute in the game loop. However, since the coroutine yields to WaitForSeconds (see below), setting the loop time to 0 will effectively cause the coroutine to run as frequently as the game loop since execution of the coroutine in this case happens right after Unity’s Update method.

    IEnumerator BeatCheck ()
    {
        while (audioSource.isPlaying) {
            currentSample = audioSource.timeSamples;

            // Reset next beat sample when audio clip wraps.
            if (currentSample < previousSample) {
                nextBeatSample = 0f;
            }

            if (currentSample >= (nextBeatSample + sampleOffset)) {
                foreach (GameObject obj in observers) {
                    obj.GetComponent<BeatObserver>().BeatNotify(beatType);
                }
                nextBeatSample += samplePeriod;
            }

            previousSample = currentSample;

            yield return new WaitForSeconds(loopTime / 1000f);
        }
    }

Furthermore, the fields that count the sample positions and next sample positions are declared as floats, which may seem wrong at first since there is no possibility of fractional samples. However, the sample period (the number of samples between each beat in the audio) is calculated from the BPM of the audio and the note value of the beat to check, so it is likely to result in a floating point value. In other words:

samplePeriod = (60 / (bpm * beatValue)) * sampleRate

where beatValue is a constant that defines the ratio of the beat to a quarter note. For instance, for an eighth beat, beatValue = 2 since there are two eighths in a quarter. For a dotted quarter beat, beatValue = 1 / 1.5; the ratio of one quarter to a dotted quarter.

If samplePeriod is truncated to an int, drift would occur due to loss of precision when comparing the sample values, especially for longer clips of music.
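To put a number on that drift, here is a small standalone sketch of the formula above. `SamplePeriodDemo` and its method names are hypothetical and exist only for this illustration.

```csharp
using System;

// Hypothetical helper illustrating the samplePeriod formula and the drift
// that truncating it to an int would introduce.
public static class SamplePeriodDemo
{
    // samplePeriod = (60 / (bpm * beatValue)) * sampleRate
    public static double SamplePeriod(double bpm, double beatValue, int sampleRate)
    {
        return (60.0 / (bpm * beatValue)) * sampleRate;
    }

    // Accumulated error, in samples, after the given number of beats if the
    // period were truncated to an int before being added up.
    public static double DriftAfterBeats(double bpm, double beatValue,
                                         int sampleRate, int beats)
    {
        double exact = SamplePeriod(bpm, beatValue, sampleRate);
        int truncated = (int)exact;
        return (exact - truncated) * beats;
    }
}
```

At 130 bpm and 44100 Hz, a quarter-note period is about 20353.85 samples. Truncating it loses roughly 0.85 samples per beat, which adds up to about 846 samples (roughly 19 ms) after 1000 beats. At 120 bpm the period happens to be a whole number (22050), so the problem only shows up at some tempos.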

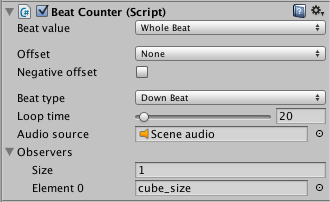

When it is determined that a beat has occurred in the audio, the script notifies its observers along with the type of beat that triggered the event (the beat type is a user-defined value that allows different action to be taken depending on the beat type). The observers (any Unity object) are easily added through the scripts inspector panel:

Each object observing a beat counter also contains a beat observer script that serves two functions: it allows control over the tolerance/sensitivity of the beat event, and sets the corresponding bit in a bit mask for what beat just occurred that the user can poll for in the object’s script and take appropriate action.

    public void BeatNotify (BeatType beatType)
    {
        beatMask |= beatType;
        StartCoroutine(WaitOnBeat(beatType));
    }

    IEnumerator WaitOnBeat (BeatType beatType)
    {
        yield return new WaitForSeconds(beatWindow / 1000f);
        beatMask ^= beatType;
    }
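The bit mask operations can be tried outside Unity with a minimal sketch like the one below. `DemoBeatType`, its values, and `BeatMaskDemo` are hypothetical stand-ins, not the project's types. The sketch also makes one deliberate swap: it clears a bit with `&= ~` instead of the `^=` used above, because XOR toggles the bit and would set it again if the same beat type were cleared twice.

```csharp
using System;

// Hypothetical flags enum; the names and values are assumptions.
[Flags]
public enum DemoBeatType
{
    None = 0,
    OffBeat = 1,
    OnBeat = 2,
    UpBeat = 4,
    DownBeat = 8
}

// Hypothetical stand-in for the mask handling in a beat observer.
public class BeatMaskDemo
{
    public DemoBeatType Mask { get; private set; }

    // Sets the bit for the beat that just occurred.
    public void Set(DemoBeatType beat) { Mask |= beat; }

    // Clears the bit; safe to call more than once for the same beat.
    public void Clear(DemoBeatType beat) { Mask &= ~beat; }

    // True if every bit of the given beat type is currently set.
    public bool IsSet(DemoBeatType beat) { return (Mask & beat) == beat; }
}
```

Polling code can then test `IsSet(DemoBeatType.DownBeat)` in the same way the Update example below tests the mask directly.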

To illustrate how a game object might respond to and take action when a beat occurs, the following script activates an animation trigger on the down-beat and rotates the object during an up-beat by 45 degrees:

    void Update ()
    {
        if ((beatObserver.beatMask & BeatType.DownBeat) == BeatType.DownBeat) {
            anim.SetTrigger("DownBeatTrigger");
        }

        if ((beatObserver.beatMask & BeatType.UpBeat) == BeatType.UpBeat) {
            transform.Rotate(Vector3.forward, 45f);
        }
    }

Finally, here is a short video demonstrating the example scene set up in the project:

Thank you so much for sharing this script! It was very helpful for me!

Thanks for sharing the scripts and project, this looks like just what I was looking for! Very nice work.

Trying to make a rhythm game and this is a beautiful article. Thank you so much.

Thanks for this wonderful tutorial.

One question I have is, I noticed there is a different delay getting users input across different Android devices. The game and the music plays very smoothly (it is not a lag)

I wanted to get input from the user in half beat (tap). Most of the devices I tried it worked as expected. Even in low end devices there is a lag on visuals but the input from the user get registered on half beat.

On some devices to get input at half beat I have to set the value at 0.7 etc.

I don’t think it related to device performance. Is there some other settings causing it?

Android devices seem to have widely varying (and generally not very good) audio latency. See this post for a comparison with iOS: http://superpowered.com/latency/#table

For programs like synthesizers or games that rely on responsive audio sync, latency around 10ms is ideal, whereas anything > 20ms would be considered unplayable by pro musicians.

I’m not familiar with Android at all, but since the latency is a direct result of the hardware, I suspect there is little you can do about it.

Thank you for your reply.

What you are saying is correct.

When I test this on iOS devices the delay is consistent. But on Android devices it is inconsistent. I noticed it is especially bad on Sony Xperia.

But I noticed by adjusting the shift we can compensate for this problem. For example if I set 0.7 instead of 0.5 for half beat it will work.

Is there a way to figure out this shift on the run?

I’m not familiar with Android devices at all having never programmed for one, but one of the main factors involved in latency is the buffer size. So if you can get that from the device you’re on, that’s at least part of it solved.

Thank you so much for this tutorial, it has helped me a lot!

No problem. Glad it helped!

Hello and first I want to thank you for this great post. So helpful! 🙂

And then I would like to ask some questions as a beginner of Unity, rhythm game maker and programmer. I have almost decided that I would like to make a rhythm game with Unity and the style is not that different from the classic rhythm game, but I am totally new to everything, so would you mind, maybe, pointing out several important units/function modules in Unity which may be used in making a rhythm game so I can check out on them and maybe try the things out?

Actually I have too many questions to ask, but I will try to keep myself silent. Just hope I can get some starter hints. A big thank you in advance.

Your question is a little too broad to give a specific answer, but in addition to the source code to this beat synchronizer, you can use Unity’s “PlayScheduled”, “SetScheduledStartTime”, “SetScheduledEndTime” methods to gain better control over when to play audio along the DSP timeline (i.e. align to tempo, etc.). These methods should always use the time returned by AudioSettings.dspTime as a reference.

Other than that, I can’t be any more specific without knowing more about what you are trying to do.

Hi Christian, amazing article. It has helped me a great deal. Do you have a work around for that burst of noise that occurs when using playScheduled? Thanks!

Jim

Great to hear it’s been helpful!

I did find a workaround for the “PlayScheduled” bug. Immediately after calling it, call “SetScheduledStartTime” with the same time argument. That seems to do it.

Hi Christian, great library. Like a few others, I am also making a rhythm game. Is there a way to dynamically sync the visuals to the music without knowing what the bpm and start delay are ahead of time? Thanks!

Hi Christian, great work on this. Like a few others, I am also working on a rhythm game. Is there a way to synchronize the visuals to the music dynamically without knowing what the bpm and start delay are ahead of time? Thanks!

Sorry if there’s repeat comments. My posts aren’t showing up. How do I sync everything to the music dynamically without knowing the start delay and bpm ahead of time? Is there a way to calculate it?

You can determine bpm dynamically of music using a beat finder algorithm (similar to what you would see in a DAW). But reliability will depend on the music itself, the audio quality, and the quality of the algorithm implementation.

I would imagine that a good start on writing a beat finder would be to use a combination of low-pass filtering and determining transients that you can measure the time between and thus the bpm. Just off the top of my head..
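For anyone who wants to experiment with that idea, here is a very naive sketch of it: a one-pole low-pass filter, a threshold-based transient detector, and the median gap between transients converted to bpm. Everything in it (the `NaiveBeatFinder` name, the cutoff, threshold, and minimum gap defaults) is an assumption chosen for illustration, and it would likely need a lot of work before it coped with real music.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, deliberately simple beat finder for mono samples in [-1, 1].
public static class NaiveBeatFinder
{
    // Returns an estimated bpm, or 0 if fewer than two transients were found.
    public static double EstimateBpm(float[] samples, int sampleRate,
                                     float cutoffHz = 150f,
                                     float threshold = 0.3f,
                                     double minGapSeconds = 0.2)
    {
        // One-pole low-pass coefficient for the given cutoff frequency.
        double alpha = 1.0 - Math.Exp(-2.0 * Math.PI * cutoffHz / sampleRate);
        double low = 0.0;
        bool above = false;
        long lastOnset = -1;
        long minGap = (long)(minGapSeconds * sampleRate);
        var intervals = new List<double>();

        for (int i = 0; i < samples.Length; i++) {
            low += alpha * (samples[i] - low);
            double level = Math.Abs(low);

            bool gapOk = lastOnset < 0 || i - lastOnset >= minGap;
            if (!above && level >= threshold && gapOk) {
                // Rising edge through the threshold: treat it as a transient.
                if (lastOnset >= 0) {
                    intervals.Add((i - lastOnset) / (double)sampleRate);
                }
                lastOnset = i;
                above = true;
            } else if (above && level < threshold * 0.5) {
                // Hysteresis: re-arm only once the level has dropped well below.
                above = false;
            }
        }

        if (intervals.Count == 0) {
            return 0.0;
        }
        intervals.Sort();
        double median = intervals[intervals.Count / 2];
        return 60.0 / median;
    }
}
```

Using the median rather than the mean makes the estimate a little less sensitive to a missed or spurious transient, but as noted above, reliability still depends heavily on the music itself.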

Hello! First of all, thank you so much for sharing your script. My team and I are trying to make a videogame that includes rhythm. I found your code and I am trying to understand how it works. How can I adapt either the scene or the code to make it work with another song?

Hi Christian, your post has been a great help with implementing my rhythm game. However, I was wondering if you have any insights into how the BPM could dynamically change in tandem with the pitch of the audio.

Cool, great to hear.

In terms of your question, I’d probably need some more details of how the pitch of the audio is changing and whether you know this information ahead of time. i.e. if the pitch is being changed by some known value you could just do a mapping of pitch -> BPM, or map it to a curve.
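As a rough sketch of the known-value case: if the pitch is changed by altering playback speed (which is my understanding of how an audio source's pitch setting behaves in Unity, so treat that as an assumption), the tempo scales by the same factor. `PitchTempo` and `EffectiveBpm` are hypothetical names.

```csharp
using System;

// Hypothetical mapping from a playback-speed pitch factor to tempo.
public static class PitchTempo
{
    // Assumes pitch is a positive speed multiplier (1.0 = original speed).
    public static double EffectiveBpm(double baseBpm, double pitch)
    {
        if (pitch <= 0.0) {
            throw new ArgumentOutOfRangeException(nameof(pitch));
        }
        return baseBpm * pitch;
    }
}
```

A clip authored at 120 bpm and played back with a pitch factor of 1.5 would then be treated as 180 bpm, and the sample period could be recalculated from that value.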

If you need to dynamically determine pitch and BPM, however, that is a more complex problem that would require some filtering and determining transients in the audio that you could use to calculate the BPM based on how far apart the transients are.

Hey Christian! Very cool stuff here 🙂

I am trying to build a music system for a game where the music can change either on the next beat of the bar, at the end of the bar or instantly. Would that be achievable with your system and how?

Hope you have time to help.

Cheers

Thanks.

Yeah, that should be possible. It’s been quite some time since I wrote these scripts, so I can’t remember all the details. But you can create individual components, each with their own BeatCounter script synchronized to whatever you need. So in your case, the next beat (so set the BeatCounter to fire on every beat), end of the bar (set this BeatCounter to fire on the last beat of the bar), or instantly (requires no synchronization; just play straight away from your end). Then you would just have to handle the logic of which component/BeatCounter’s notification you use when the music needs to change. i.e. As the notifications come in from each component, you need to decide which one controls the music change based on your particular logic.

Cheers,

Christian

Hi Christian,

I’m currently testing out your project from GitHub and it seems that when I minimize Unity and then try to resume, the beat counters completely stop running the coroutine (BeatCheck) that checks if a beat has occurred. Are you having the same issue on your end?

Thanks,

Ty

Hi Ty,

No, I’m not experiencing this issue. I tried minimizing Unity and making sure the window lost focus, and when I went back to it, everything continued on fine.

I’m not totally up to date with Unity though — I’m on 5.3.4. And I’m on a Mac too. Not sure if that has anything to do with it..

Hey Christian,

Thanks for replying! I upgraded your project to 5.4 and I’m on Windows 10. It even has the same problem when I make a build of it, so it shouldn’t be just the editor itself. I’ll play around with it a little more to see what could be the issue.

Hi and thanks Christian, this works great still in 2018.

Old problem but I have solution for future readers.

Using Beat Synchronizer in 2017.3 and had the same problem. Problem is in BeatCheck coroutines while loop in Beatcounter script. OnApplicationPause(true) happens when editor/game is out of focus and audioSource.isPlaying turns false and while loop ends.

Just starting the BeatCheck coroutine again when out of pause works for my needs.

– Sam

Great work. Thanks!

I must photocopy this article immediately.
