Having previously covered the general architecture and the graphics system, we come now to the audio part of the game engine. One might be surprised (or unsurprised depending on one’s expectations) that it is conceptually very similar to how the graphics work. As a quick recap of what was covered in the last part, the platform sends some memory to the game representing a bitmap that the game draws into for each frame, then takes that bitmap at the end of the frame and renders it onto the screen.

My audio system works in much the same way. The platform layer sends some memory representing an audio buffer to the game layer. As with the graphics system, the game layer does all the heavy lifting, mixing the audio sources (music and sound effects) into the buffer passed in by the platform layer. The platform layer then takes this audio buffer and sends it to the audio output provided by the operating system.

To do this, the platform layer needs to talk to Core Audio, which powers audio on all of Apple's platforms. Core Audio is a C-based API and, although very powerful, can be a little cumbersome to work with at times. However, since the game layer handles all the mixing and the platform layer only deals with one master audio buffer, calls to the Core Audio API are kept to a minimum.

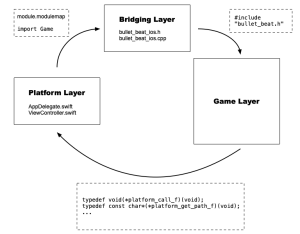

To encapsulate the data describing the audio buffer, the bridging layer declares a PlatformAudio struct (recall that the bridging layer is a .h/.cpp file pair that connects the game, written in C++, to the iOS platform layer, written in Swift):

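```c
#include <stdint.h>

struct PlatformAudio {
    double sampleRate;       // samples per second, per channel
    uint32_t channels;       // 2 for stereo
    uint32_t bytesPerFrame;  // bytes per sample * channels
    void *samples;           // interleaved output samples filled by the game layer
};
```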

Initialization of this struct and the audio system as a whole takes place in the didFinishLaunching method of the AppDelegate:

```swift
import UIKit
import AudioToolbox

class AppDelegate: UIResponder, UIApplicationDelegate {
    typealias AudioSample = Int16

    var window: UIWindow?
    var audioOutput: AudioComponentInstance?
    var platformAudio: PlatformAudio!

    func application(_ application: UIApplication, didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // Platform initialization

        // Configure Core Audio, then the game-side audio buffers, then start the output unit.
        if initAudio(sampleRate: 48000.0, channels: 2) {
            ios_audio_initialize(&platformAudio)
            AudioOutputUnitStart(audioOutput!)
        }

        ios_game_startup()

        let screen = UIScreen.main
        window = UIWindow(frame: screen.bounds)
        window?.rootViewController = ViewController()
        window?.makeKeyAndVisible()
        return true
    }
}
```

The AudioComponentInstance represents an Audio Unit, which Core Audio requires for working with audio at a low level and which provides the lowest latency for audio processing. After the platform layer is initialized (covered back in the first part of this series), the audio system is initialized first on the OS side and then in the game layer (via the bridging interface). Once that is done, the output unit is started; what this actually does will become clear shortly.

The audio interface in the bridging layer includes three functions for creating, initializing, and tearing down the audio state:

```c
struct PlatformAudio* ios_create_platform_audio(double sampleRate, uint16_t channels, uint16_t bytesPerSample);
void ios_audio_initialize(struct PlatformAudio *platformAudio);
void ios_audio_deinitialize(struct PlatformAudio *platformAudio);
```

Before moving on to have a look at the initAudio method, here is the implementation for these functions (in the bridging .cpp file):

```cpp
#include <cstdlib>

// Game-side audio buffer that the game layer mixes into.
static bbAudioBuffer audioBuffer = {0};

struct PlatformAudio*
ios_create_platform_audio(double sampleRate, uint16_t channels, uint16_t bytesPerSample) {
    // A single static instance, so the returned pointer stays valid for the app's lifetime.
    static PlatformAudio platformAudio = {};
    platformAudio.sampleRate = sampleRate;
    platformAudio.channels = channels;
    platformAudio.bytesPerFrame = bytesPerSample * channels;
    return &platformAudio;
}

void
ios_audio_initialize(struct PlatformAudio *platformAudio) {
    platform.audioSampleRate = platformAudio->sampleRate;
    audioBuffer.sampleRate = platformAudio->sampleRate;
    audioBuffer.channels = platformAudio->channels;
    // One second's worth of frames for the final 16-bit output...
    audioBuffer.samples = (int16_t *)calloc((size_t)audioBuffer.sampleRate, platformAudio->bytesPerFrame);
    // ...and for the floating-point buffer the game layer mixes into.
    audioBuffer.mixBuffer = (float *)calloc((size_t)audioBuffer.sampleRate, sizeof(float) * platformAudio->channels);
    // Expose the output samples to the platform layer.
    platformAudio->samples = audioBuffer.samples;
}

void
ios_audio_deinitialize(struct PlatformAudio *platformAudio) {
    free(audioBuffer.samples);
    free(audioBuffer.mixBuffer);
}
```

Pretty straightforward, really. The ios_create_platform_audio function is called at the start of initAudio:

```swift
private func initAudio(sampleRate: Double, channels: UInt16) -> Bool {
    let bytesPerSample = MemoryLayout<AudioSample>.size

    // Create the PlatformAudio description in the bridging layer and keep a copy of it.
    if let ptr = ios_create_platform_audio(sampleRate, channels, UInt16(bytesPerSample)) {
        platformAudio = ptr.pointee
    } else {
        return false
    }

    // Packed, interleaved, signed 16-bit linear PCM.
    var streamDescription = AudioStreamBasicDescription(mSampleRate: sampleRate,
                                                        mFormatID: kAudioFormatLinearPCM,
                                                        mFormatFlags: kLinearPCMFormatFlagIsSignedInteger | kLinearPCMFormatFlagIsPacked,
                                                        mBytesPerPacket: platformAudio.bytesPerFrame,
                                                        mFramesPerPacket: 1,
                                                        mBytesPerFrame: platformAudio.bytesPerFrame,
                                                        mChannelsPerFrame: platformAudio.channels,
                                                        mBitsPerChannel: UInt32(bytesPerSample * 8),
                                                        mReserved: 0)

    // Describe the RemoteIO output unit, which talks to the audio hardware.
    var desc = AudioComponentDescription()
    desc.componentType = kAudioUnitType_Output
    desc.componentSubType = kAudioUnitSubType_RemoteIO
    desc.componentManufacturer = kAudioUnitManufacturer_Apple
    desc.componentFlags = 0
    desc.componentFlagsMask = 0

    guard let defaultOutputComponent = AudioComponentFindNext(nil, &desc) else {
        return false
    }

    var status = AudioComponentInstanceNew(defaultOutputComponent, &audioOutput)
    if let audioOutput = audioOutput, status == noErr {
        // Register the render callback with a pointer to platformAudio as its context.
        // The pointer is used after the closure returns, which Swift does not formally
        // guarantee; this relies on platformAudio living in the long-lived AppDelegate.
        var input = AURenderCallbackStruct()
        input.inputProc = ios_render_audio
        withUnsafeMutableBytes(of: &platformAudio) { ptr in
            input.inputProcRefCon = ptr.baseAddress
        }

        var dataSize = UInt32(MemoryLayout<AURenderCallbackStruct>.size)
        status = AudioUnitSetProperty(audioOutput, kAudioUnitProperty_SetRenderCallback, kAudioUnitScope_Input, 0, &input, dataSize)

        if status == noErr {
            // Tell the unit the format of the audio coming into it.
            dataSize = UInt32(MemoryLayout<AudioStreamBasicDescription>.size)
            status = AudioUnitSetProperty(audioOutput, kAudioUnitProperty_StreamFormat, kAudioUnitScope_Input, 0, &streamDescription, dataSize)

            if status == noErr {
                status = AudioUnitInitialize(audioOutput)
                return status == noErr
            }
        }
    }

    return false
}
```

After creating the PlatformAudio instance, the method sets up the output Audio Unit on the Core Audio side. Core Audio needs to know what kind of audio it will be dealing with and how the data is laid out in memory in order to interpret it correctly. This is described by an AudioStreamBasicDescription instance, which is eventually set as a property on the audio output unit.

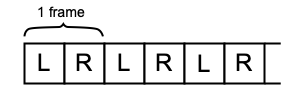

The first field is easy enough: it is just the sample rate of the audio. For mFormatID, I pass kAudioFormatLinearPCM, specifying that the audio data will be uncompressed, standard linear PCM. Next, for mFormatFlags I pass flags specifying that the audio samples will be packed, signed 16-bit integers. Another flag that can be set here, kLinearPCMFormatFlagIsNonInterleaved, specifies that the audio is non-interleaved, meaning that all samples for each channel are grouped together and each channel is laid out end-to-end. As I have omitted this flag, the audio is interleaved, meaning that the samples for each channel alternate within a single buffer, as in the diagram:

(As a quick side note: although the final format of the audio is signed 16-bit integers, the game layer mixes in floating point. Mixing and processing at a higher resolution than the final output, and sometimes at a higher sample rate, is a common workflow in audio.)
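To make the interleaved layout and the float-to-integer step concrete, here is a minimal C++ sketch. It assumes the mix buffer holds interleaved floats in the nominal range [-1, 1] and scales them by 32767; the function names are hypothetical, since the engine's actual conversion code is not shown in this post.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Position of a sample in an interleaved buffer: frames are stored one after
// another, and each frame holds one sample per channel (L R L R ... for stereo).
inline std::size_t interleaved_index(std::size_t frame, std::size_t channel,
                                     std::size_t channels) {
    return frame * channels + channel;
}

// Convert an interleaved float mix to interleaved signed 16-bit PCM.
// Values outside [-1, 1] are clamped so loud mixes clip instead of wrapping around.
void convert_mix_to_int16(const float *mix, int16_t *out,
                          std::size_t frameCount, std::size_t channels) {
    const std::size_t sampleCount = frameCount * channels;
    for (std::size_t i = 0; i < sampleCount; ++i) {
        const float clamped = std::max(-1.0f, std::min(1.0f, mix[i]));
        out[i] = static_cast<int16_t>(std::lround(clamped * 32767.0f));
    }
}
```

Because the layout is interleaved, the conversion can walk the buffer linearly without caring which channel each sample belongs to.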

The remaining fields in the stream description require a bit of calculation, except for mFramesPerPacket, which is always 1 for uncompressed audio. Since there is one frame per packet, mBytesPerPacket is the same as the number of bytes per frame. mChannelsPerFrame is simply the number of channels, and mBitsPerChannel is the size of one audio sample in bits. The bytes-per-frame value, as seen above, is the size of one sample in bytes (the bit depth) multiplied by the number of channels.
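The arithmetic behind these fields can be sketched in C++ as follows. StreamFormat and make_linear_pcm_format are hypothetical names; the struct only mirrors the relevant AudioStreamBasicDescription fields so that the calculation can be shown without the Core Audio headers.

```cpp
#include <cstdint>

// Hypothetical stand-in for the layout fields of AudioStreamBasicDescription.
struct StreamFormat {
    double   sampleRate;
    uint32_t bytesPerPacket;
    uint32_t framesPerPacket;
    uint32_t bytesPerFrame;
    uint32_t channelsPerFrame;
    uint32_t bitsPerChannel;
};

// Derive the layout fields for packed, interleaved linear PCM.
StreamFormat make_linear_pcm_format(double sampleRate, uint32_t channels,
                                    uint32_t bytesPerSample) {
    StreamFormat f{};
    f.sampleRate       = sampleRate;
    f.channelsPerFrame = channels;
    f.bitsPerChannel   = bytesPerSample * 8;         // 2 bytes -> 16 bits
    f.bytesPerFrame    = bytesPerSample * channels;  // one sample per channel
    f.framesPerPacket  = 1;                          // always 1 for uncompressed PCM
    f.bytesPerPacket   = f.bytesPerFrame * f.framesPerPacket;
    return f;
}
```

For the 48 kHz stereo 16-bit configuration used in this engine, this yields 4 bytes per frame and per packet, and 16 bits per channel.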

Next, I need to get the output Audio Component: an Audio Unit in the Core Audio system that will send audio to the output hardware of the device. To find this component, an AudioComponentDescription must be configured with parameters that match the desired unit (iOS contains a number of built-in units, from various I/O units to mixer and effect units). To find the output unit I need, I specify “output” for the type, “remote I/O” for the subtype (RemoteIO is the general-purpose I/O unit that connects to the audio hardware), and “Apple” as the manufacturer.
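The type, subtype, and manufacturer fields of an AudioComponentDescription are OSType values: 32-bit integers built from four ASCII characters. The sketch below packs such codes; four_cc and the k-prefixed names are hypothetical, while the character codes in the comments correspond to the Apple constants used in initAudio.

```cpp
#include <cstdint>

// Pack a four-character code into 32 bits, first character in the most
// significant byte, the way OSType constants are defined.
constexpr uint32_t four_cc(const char (&code)[5]) {
    return (static_cast<uint32_t>(static_cast<unsigned char>(code[0])) << 24) |
           (static_cast<uint32_t>(static_cast<unsigned char>(code[1])) << 16) |
           (static_cast<uint32_t>(static_cast<unsigned char>(code[2])) << 8) |
            static_cast<uint32_t>(static_cast<unsigned char>(code[3]));
}

// Hypothetical names for the three values used to find the output unit.
constexpr uint32_t kOutputType  = four_cc("auou");  // kAudioUnitType_Output
constexpr uint32_t kRemoteIOSub = four_cc("rioc");  // kAudioUnitSubType_RemoteIO
constexpr uint32_t kAppleMfr    = four_cc("appl");  // kAudioUnitManufacturer_Apple
```

Seen this way, AudioComponentFindNext is matching three small integers against the components registered on the system.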

Once the component is found with a call to AudioComponentFindNext, I create an instance of the audio output unit from it with AudioComponentInstanceNew. This Audio Unit (and Core Audio in general) works on a “pull model”: you register a function with Core Audio, which then calls it whenever it needs audio from you to fill its internal buffers. This function is called on a high-priority thread, independently of the game's update loop, and depending on the size of the hardware I/O buffer it can be called more often than the game updates. Effectively this means you may have less time to do audio processing per call than you have for simulating and rendering a frame, so the audio processing needs to be fast enough to keep up. If the callback misses its deadline, the buffer that is eventually sent to the hardware is most likely empty, resulting in audio artifacts like clicks or pops caused by the discontinuity with the audio in the previous buffer.
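A rough way to reason about that deadline is to compare the duration of audio requested per callback with the duration of a game frame. This C++ sketch is illustrative only: the number of frames Core Audio actually requests depends on the device and the I/O buffer duration, so the frame counts below are examples, not measurements.

```cpp
#include <cstdint>

// Duration of audio produced by one callback, in milliseconds.
double callback_budget_ms(uint32_t frameCount, double sampleRate) {
    return 1000.0 * static_cast<double>(frameCount) / sampleRate;
}

// Duration of one game frame at a given frame rate, in milliseconds.
double frame_budget_ms(double framesPerSecond) {
    return 1000.0 / framesPerSecond;
}
```

For example, callback_budget_ms(256, 48000.0) is about 5.3 ms, well under the roughly 16.7 ms of frame_budget_ms(60.0), whereas a request of 1024 frames covers about 21.3 ms. The smaller the I/O buffer, the tighter the per-call budget.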

To set the callback function on the Audio Unit, I need an AURenderCallbackStruct instance that holds a pointer to the callback function and a context pointer. This is set as a property on the Audio Unit by calling AudioUnitSetProperty with “input” as the scope (this tells Core Audio that the property applies to audio coming into the unit). Next, I take the stream description initialized earlier and set it as a property on the Audio Unit, also on the “input” scope (i.e. telling the Audio Unit about the format of the audio data coming into it). Finally, the Audio Unit is initialized with AudioUnitInitialize and is then ready for processing. The call we saw earlier to start the Audio Unit after initialization tells the OS to begin calling the callback function to receive audio.
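The callback-plus-context pattern is easier to see outside Core Audio. The following C++ sketch is a hypothetical simulation, not Core Audio's API: RenderCallback mirrors the function-pointer-plus-context shape of AURenderCallbackStruct, AudioContext stands in for PlatformAudio, and pull plays the part of the output unit asking for a number of frames. The capacity check reflects that the game-side buffer holds a fixed number of frames (one second's worth in ios_audio_initialize).

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical mirror of AURenderCallbackStruct: a function pointer plus an
// opaque context pointer that is handed back on every call.
using RenderProc = int (*)(void *refCon, uint32_t frameCount, void *dest);

struct RenderCallback {
    RenderProc proc;
    void *refCon;
};

// Hypothetical context, playing the role PlatformAudio plays in the engine.
struct AudioContext {
    uint32_t bytesPerFrame;   // e.g. 4 for stereo 16-bit
    uint32_t capacityFrames;  // frames the game-side buffer can hold
    const void *samples;      // game-side output buffer, already mixed
};

// The function being "pulled": copy the requested frames into the device buffer.
int render(void *refCon, uint32_t frameCount, void *dest) {
    auto *ctx = static_cast<AudioContext *>(refCon);
    if (frameCount > ctx->capacityFrames) {
        return -1;  // refuse rather than read past the end of the source buffer
    }
    std::memcpy(dest, ctx->samples,
                static_cast<std::size_t>(frameCount) * ctx->bytesPerFrame);
    return 0;
}

// Stand-in for the output unit: it decides how many frames it needs and
// calls the registered function with the registered context.
int pull(const RenderCallback &cb, uint32_t frameCount, uint32_t bytesPerFrame,
         std::vector<uint8_t> &deviceBuffer) {
    deviceBuffer.assign(static_cast<std::size_t>(frameCount) * bytesPerFrame, 0);
    return cb.proc(cb.refCon, frameCount, deviceBuffer.data());
}
```

The key design point is that the output unit, not the game, decides when and how much audio to request; the context pointer is how the callback reaches the engine's state.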

The callback function itself is actually quite simple:

```swift
fileprivate func ios_render_audio(inRefCon: UnsafeMutableRawPointer,
                                  ioActionFlags: UnsafeMutablePointer<AudioUnitRenderActionFlags>,
                                  inTimeStamp: UnsafePointer<AudioTimeStamp>,
                                  inBusNumber: UInt32,
                                  inNumberFrames: UInt32,
                                  ioData: UnsafeMutablePointer<AudioBufferList>?) -> OSStatus
{
    // The context pointer registered in initAudio points at the PlatformAudio struct.
    var platformAudio = inRefCon.assumingMemoryBound(to: PlatformAudio.self).pointee

    // Ask the game layer to produce inNumberFrames frames of audio.
    ios_process_audio(&platformAudio, inNumberFrames)

    // Interleaved audio uses a single buffer; copy the game's samples into it.
    let buffer = ioData?.pointee.mBuffers.mData
    buffer?.copyMemory(from: platformAudio.samples, byteCount: Int(platformAudio.bytesPerFrame * inNumberFrames))

    return noErr
}
```

Similar to the graphics system, here is where the call is made to the bridging layer to process (i.e. fill) the audio buffer with data from the game. Core Audio calls this function with the number of frames it needs as well as the buffer(s) to place the data in. Once the game layer is done processing the audio, the data is copied into the buffer provided by the OS. The ios_process_audio function simply forwards the call to the game layer after specifying how many frames of audio the system requires:

```cpp
void
ios_process_audio(struct PlatformAudio *platformAudio, uint32_t frameCount) {
    // Tell the game layer how many frames Core Audio needs, then let it mix them.
    audioBuffer.frameCount = frameCount;
    process_audio(&audioBuffer, &gameMemory, &platform);
}
```
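The post does not show process_audio itself, but the idea described at the start (the game layer mixing music and sound effects into one buffer, in floating point) can be sketched as follows. PlayingSound and mix_sounds are hypothetical; the sketch assumes every source is interleaved float data with the same channel count and sample rate as the output, and simply sums the sources with a per-sound volume.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical playing sound: interleaved float samples plus a read cursor.
struct PlayingSound {
    const std::vector<float> *samples;  // same channel count as the output
    std::size_t cursorFrame;            // next frame to play
    float volume;
};

// Sum every active sound into the float mix buffer. Sounds that run out of
// data simply stop contributing; their cursors record how far they got.
void mix_sounds(std::vector<PlayingSound> &sounds, float *mixBuffer,
                std::size_t frameCount, std::size_t channels) {
    std::fill(mixBuffer, mixBuffer + frameCount * channels, 0.0f);
    for (auto &s : sounds) {
        const std::size_t totalFrames = s.samples->size() / channels;
        for (std::size_t f = 0; f < frameCount && s.cursorFrame < totalFrames;
             ++f, ++s.cursorFrame) {
            for (std::size_t c = 0; c < channels; ++c) {
                mixBuffer[f * channels + c] +=
                    s.volume * (*s.samples)[s.cursorFrame * channels + c];
            }
        }
    }
}
```

After mixing, the float buffer would still need converting to the 16-bit samples that the platform layer copies into Core Audio's buffer.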

The last part to cover in the audio system of my custom game engine is how to handle audio with regard to the lifecycle of the application. We saw how audio is initialized in the didFinishLaunching method of the AppDelegate, so naturally the audio is shut down in the applicationWillTerminate method:

```swift
func applicationWillTerminate(_ application: UIApplication) {
    // Stop and dispose of the output unit before freeing the buffers it reads from.
    if let audioOutput = audioOutput {
        AudioOutputUnitStop(audioOutput)
        AudioUnitUninitialize(audioOutput)
        AudioComponentInstanceDispose(audioOutput)
    }

    ios_game_shutdown()
    ios_audio_deinitialize(&platformAudio)
    ios_platform_shutdown()
}
```

When the user hits the Home button and sends the game into the background, audio processing needs to stop, and when the game is brought back to the foreground again, it needs to resume playing. Stopping the Audio Unit will halt the callback function that processes audio from the game, and starting it will cause Core Audio to resume calling the function as needed.

```swift
func applicationWillEnterForeground(_ application: UIApplication) {
    // Resume the render callback, then resume the game loop.
    if let audioOutput = audioOutput {
        AudioOutputUnitStart(audioOutput)
    }

    if let vc = window?.rootViewController as? ViewController {
        vc.startGame()
    }
}

func applicationDidEnterBackground(_ application: UIApplication) {
    // Halt the render callback, then pause the game loop.
    if let audioOutput = audioOutput {
        AudioOutputUnitStop(audioOutput)
    }

    if let vc = window?.rootViewController as? ViewController {
        vc.stopGame()
    }
}
```

This completes my detailed overview of the three critical pieces of any game engine: the platform, the graphics, and the audio. And as I did with the blog post on the graphics system, here is a short demo of the audio system running in the game engine: