In part 1 of this series of posts, I went over creating an envelope detector that detects both peak amplitude and RMS values. In part 2, I used it to create a compressor/limiter. There were two common features missing from that compressor plug-in, however, that I will go over in this final part: soft knee and lookahead. Also, as I have stated in the previous parts, this effect is being created with Unity in mind, but the theory and code are easily adaptable to other uses.

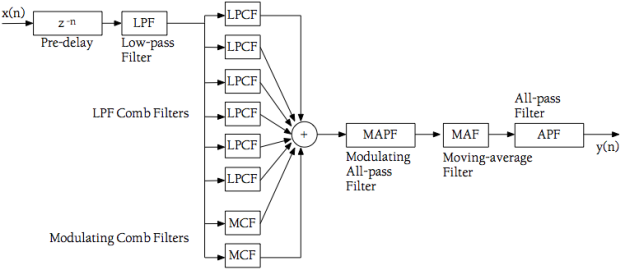

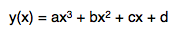

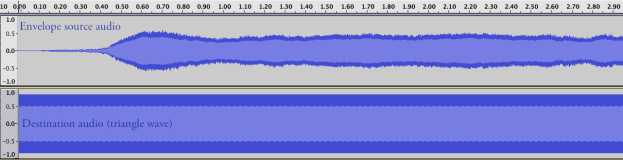

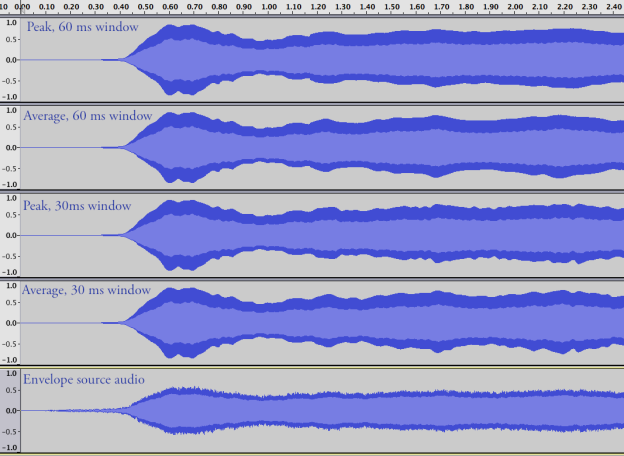

Let’s start with lookahead since it is very straightforward to implement. Lookahead is common in limiters and compressors because any non-zero attack/release time causes the envelope to lag behind the audio due to the filtering. As a result, the gain reduction arrives slightly late and misses the part of the signal that actually produced the envelope. This can be fixed by delaying the output of the audio so that it lines up with the signal’s envelope. The amount we delay the audio by is the lookahead time, so an extra field is needed in the compressor:

    public class Compressor : MonoBehaviour
    {
        [AudioSlider("Threshold (dB)", -60f, 0f)]
        public float threshold = 0f; // in dB

        [AudioSlider("Ratio (x:1)", 1f, 20f)]
        public float ratio = 1f;

        [AudioSlider("Knee", 0f, 1f)]
        public float knee = 0.2f;

        [AudioSlider("Pre-gain (dB)", -12f, 24f)]
        public float preGain = 0f; // in dB, amplifies the audio signal prior to envelope detection.

        [AudioSlider("Post-gain (dB)", -12f, 24f)]
        public float postGain = 0f; // in dB, amplifies the audio signal after compression.

        [AudioSlider("Attack time (ms)", 0f, 200f)]
        public float attackTime = 10f; // in ms

        [AudioSlider("Release time (ms)", 10f, 3000f)]
        public float releaseTime = 50f; // in ms

        [AudioSlider("Lookahead time (ms)", 0f, 200f)]
        public float lookaheadTime = 0f; // in ms

        public ProcessType processType = ProcessType.Compressor;
        public DetectionMode detectMode = DetectionMode.Peak;

        private EnvelopeDetector[] m_EnvelopeDetector;
        private Delay m_LookaheadDelay;

        private delegate float SlopeCalculation (float ratio);
        private SlopeCalculation m_SlopeFunc;

        // Continued...

I won’t actually go over implementing the delay itself since it is very straightforward (it’s just a simple circular buffer delay line). The one thing I will say is that if you want the lookahead time to be modifiable in real time, the circular buffer needs to be allocated for the maximum lookahead time (200 ms in my case), and you then need to keep track of the read position in the buffer, which moves according to the current delay time.
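Since the delay line is skipped here, this is a minimal sketch of what such a circular buffer delay could look like. It is not the `Delay` class used in this series: the name `DelayLineSketch`, its constructor, and its fields are assumptions for illustration, and only the `SetDelayTime`/`Process` call shapes mirror how the delay is called in `OnAudioFilterRead()` in this post. It assumes interleaved samples and a fixed channel count.

```csharp
using System;

// Hypothetical stand-in for the Delay class; not the author's implementation.
public class DelayLineSketch
{
    private readonly float[] m_Buffer;      // Interleaved samples, sized for the maximum delay.
    private readonly int m_NumChannels;
    private readonly int m_CapacityFrames;  // Maximum delay in frames, plus one.
    private int m_WriteFrame;               // Frame slot that receives the next input frame.
    private int m_DelayFrames;              // Current delay in frames.

    public DelayLineSketch(float maxDelayMs, int sampleRate, int numChannels)
    {
        m_NumChannels = numChannels;
        int maxDelayFrames = Math.Max(1, (int)Math.Ceiling(maxDelayMs * sampleRate / 1000.0));
        // One extra frame so a read at the maximum delay never lands on the slot being written.
        m_CapacityFrames = maxDelayFrames + 1;
        m_Buffer = new float[m_CapacityFrames * numChannels];
    }

    public void SetDelayTime(float delayMs, int sampleRate)
    {
        int frames = (int)Math.Round(delayMs * sampleRate / 1000.0);
        // Clamp to the range the buffer was allocated for.
        m_DelayFrames = Math.Min(Math.Max(frames, 0), m_CapacityFrames - 1);
    }

    // Replaces each frame in data with the frame that was written m_DelayFrames earlier.
    public void Process(float[] data, int numChannels)
    {
        if (numChannels != m_NumChannels)
            throw new ArgumentException("Channel count does not match the delay line.");

        for (int i = 0; i + numChannels <= data.Length; i += numChannels)
        {
            int readFrame = (m_WriteFrame - m_DelayFrames + m_CapacityFrames) % m_CapacityFrames;
            for (int chan = 0; chan < numChannels; ++chan)
            {
                // Write before reading, so a delay of 0 passes the audio through unchanged.
                m_Buffer[m_WriteFrame * numChannels + chan] = data[i + chan];
                data[i + chan] = m_Buffer[readFrame * numChannels + chan];
            }
            m_WriteFrame = (m_WriteFrame + 1) % m_CapacityFrames;
        }
    }
}
```

With a 1 ms delay at a sample rate of 1000 Hz (one frame), a mono input of 1, 2, 3, 4 comes out as 0, 1, 2, 3. Changing the delay time while audio is playing makes the read position jump, which this sketch does nothing to smooth.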

The delay comes after the envelope is extracted from the audio signal and before the compressor gain is applied:

    void OnAudioFilterRead (float[] data, int numChannels)
    {
        // Calculate pre-gain & extract envelope
        // ...

        // Delay the incoming signal for lookahead.
        if (lookaheadTime > 0f) {
            m_LookaheadDelay.SetDelayTime(lookaheadTime, sampleRate);
            m_LookaheadDelay.Process(data, numChannels);
        }

        // Apply compressor gain
        // ...
    }

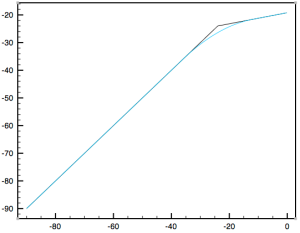

That’s all there is to the lookahead, so now we turn our attention to the last feature. The knee of the compressor is the area around the threshold where compression kicks in. This can either be a hard knee (the compressor kicks in abruptly as soon as the threshold is reached) or a soft knee (compression is introduced gradually over a range around the threshold; the size of that range is the knee width). Comparing the two in a plot illustrates the difference clearly.

There are two common ways of specifying the knee width. One is an absolute value in dB, and the other is as a factor of the threshold as a value between 0 and 1. The latter is one that I’ve found to be most common, so it will be what I use. In the diagram above, for example, the threshold is -24 dB, so a knee value of 1.0 results in a knee width of 24 dB around the threshold. Like the lookahead feature, a new field will be required:

    public class Compressor : MonoBehaviour
    {
        // Threshold and ratio fields as in the listing above...

        [AudioSlider("Knee", 0f, 1f)]
        public float knee = 0.2f; // Knee width as a fraction of the threshold (0 to 1).

        // Remaining fields as in the listing above...

        // Continued...

At the start of our process block (OnAudioFilterRead()), we set up for a possible soft knee compression:

    // Threshold is in dB and will always be either 0 or negative, so * by -1 to make positive.
    float kneeWidth = threshold * knee * -1f;
    float lowerKneeBound = threshold - (kneeWidth / 2f);
    float upperKneeBound = threshold + (kneeWidth / 2f);

Still in the processing block, we calculate the compressor slope as normal according to the equation from part 2:

    slope = 1 - (1 / ratio), for compression
    slope = 1, for limiting

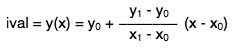

To calculate the actual soft knee, I will use linear interpolation. First I check whether the knee width is greater than 0, which indicates a soft knee. If it is, and the envelope value is within the knee bounds, the slope value is scaled by the linear interpolation factor:

    slope *= ((envValue - lowerKneeBound) / kneeWidth) * 0.5

The compressor gain is then determined using the same equation as before, except instead of calculating in relation to the threshold, we use the lower knee bound:

    gain = slope * (lowerKneeBound - envValue)

The rest of the calculation is the same:

    for (int i = 0, j = 0; i < data.Length; i+=numChannels, ++j) {
        envValue = AudioUtil.Amp2dB(envelopeData[0][j]);
        slope = m_SlopeFunc(ratio);

        if (kneeWidth > 0f && envValue > lowerKneeBound && envValue < upperKneeBound) { // Soft knee
            // Lerp the compressor slope value.
            // Slope is multiplied by 0.5 since the gain is calculated in relation to the lower knee bound for soft knee.
            // Otherwise, the interpolation's peak will be reached at the threshold instead of at the upper knee bound.
            slope *= ( ((envValue - lowerKneeBound) / kneeWidth) * 0.5f );
            gain = slope * (lowerKneeBound - envValue);
        } else { // Hard knee
            gain = slope * (threshold - envValue);
            gain = Mathf.Min(0f, gain);
        }

        gain = AudioUtil.dB2Amp(gain);

        for (int chan = 0; chan < numChannels; ++chan) {
            data[i+chan] *= (gain * postGainAmp);
        }
    }
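To check the numbers at the knee bounds, the per-sample gain calculation from the loop above can be pulled into a standalone function. This is a sketch rather than part of the component: the `KneeSketch` class and the `GainDb` name are hypothetical, it works entirely in dB, and it assumes compressor mode (slope = 1 - 1/ratio) rather than limiting.

```csharp
using System;

// Hypothetical helper for inspecting the static gain curve; not part of the Compressor component.
public static class KneeSketch
{
    // Returns the compressor gain in dB (always <= 0) for an envelope level in dB.
    // threshold is in dB (<= 0), ratio is x:1, knee is a fraction of the threshold (0 to 1).
    public static float GainDb(float envDb, float threshold, float ratio, float knee)
    {
        float slope = 1f - (1f / ratio);
        float kneeWidth = threshold * knee * -1f;
        float lowerKneeBound = threshold - (kneeWidth / 2f);
        float upperKneeBound = threshold + (kneeWidth / 2f);

        if (kneeWidth > 0f && envDb > lowerKneeBound && envDb < upperKneeBound)
        {
            // Soft knee: scale the slope by the interpolation factor, then measure
            // the overshoot from the lower knee bound instead of the threshold.
            slope *= ((envDb - lowerKneeBound) / kneeWidth) * 0.5f;
            return slope * (lowerKneeBound - envDb);
        }

        // Hard knee: no gain change below the threshold.
        return Math.Min(0f, slope * (threshold - envDb));
    }
}
```

With a threshold of -24 dB, a 4:1 ratio, and a knee of 1.0, `GainDb(-24f, -24f, 4f, 1f)` returns -2.25 dB, where a hard knee would still be at 0 dB. At the upper knee bound, `GainDb(-12f, -24f, 4f, 1f)` returns -9 dB, which is exactly where the hard knee line sits, so the two curves join without a jump.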

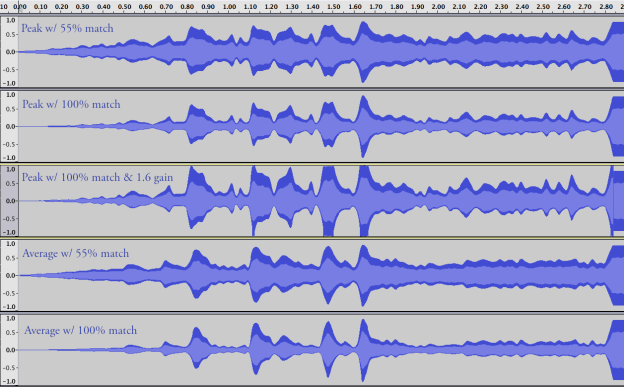

In order to verify that the soft knee is calculated correctly, it is best to plot the results. To do this I just created a helper method that calculates the compressor values for a range of input values from -90 dB to 0 dB. Here is the plot of a compressor with a threshold of -12.5 dB, a 4:1 ratio, and a knee of 0.4:

Of course this also works when the compressor is in limiter mode, which will result in a gentler application of the limiting effect.
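The plotting helper mentioned above is not shown in this post, so here is one hypothetical way to write it. `CompressorCurveSketch`, `Sweep`, and its parameters are all assumptions. The gain calculation is passed in as a delegate so that the soft-knee logic from the processing loop (in either compressor or limiter mode) can be plugged in.

```csharp
using System;

// Hypothetical helper for producing plot data; not the author's helper method.
public static class CompressorCurveSketch
{
    // Evaluates the static input/output curve at evenly spaced input levels.
    // gainDbFor maps an input level in dB to the compressor gain in dB.
    public static float[] Sweep(Func<float, float> gainDbFor, float minDb, float maxDb, float stepDb)
    {
        if (stepDb <= 0f)
            throw new ArgumentException("stepDb must be positive.");

        int count = (int)Math.Floor((maxDb - minDb) / stepDb) + 1;
        float[] outputDb = new float[count];

        for (int i = 0; i < count; ++i)
        {
            float inputDb = minDb + i * stepDb;
            // In dB, the output level is the input level plus the (negative) gain.
            outputDb[i] = inputDb + gainDbFor(inputDb);
        }
        return outputDb;
    }
}
```

As a placeholder, a plain hard-knee 4:1 curve with a -12.5 dB threshold can be swept with `CompressorCurveSketch.Sweep(env => Math.Min(0f, 0.75f * (-12.5f - env)), -90f, 0f, 0.5f)`, which returns 181 output levels running from -90 dB up to -9.375 dB. Swapping the lambda for the soft-knee calculation gives the data for a plot like the one described above.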

That concludes this series on building a compressor/limiter component.