Lately I’ve been busy developing an audio-focused game in Unity, whose built-in audio engine is notorious for being extremely basic and lacking in features. (As of this writing, Unity 5 has not yet been released, in which its entire built-in audio engine is being overhauled). For this project I have created all the DSP effects myself as script components, whose behavior is driven by Unity’s coroutines. In order to have slightly more control over the final mix of these elements, it became clear that I needed a compressor/limiter. This particular post is written with Unity/C# in mind, but the theory and code is easy enough to adapt to other uses. In this first part we’ll be looking at writing the envelope detector, which is needed by the compressor to do its job.

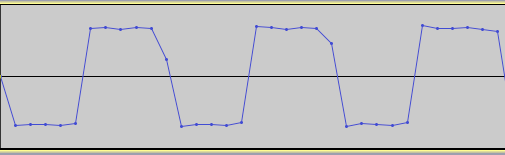

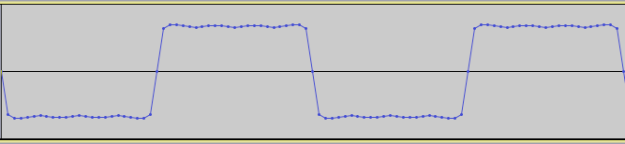

An envelope detector (also called a follower) extracts the amplitude envelope from an audio signal based on three parameters: an attack time, release time, and detection mode. The attack/release times are fairly straightforward, simply defining how quickly the detection responds to rising and falling amplitudes. There are typically two modes of calculating the envelope of a signal: by its peak value or its root mean square value. A signal’s peak value is just the instantaneous sample value while the root mean square is measured over a series of samples, and gives a more accurate account of the signal’s power. The root mean square is calculated as:

rms = sqrt ( (1/n) * (x12 + x22 + … + xn2) ),

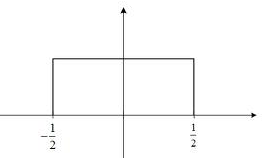

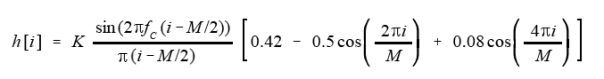

where n is the number of data values. In other words, we sum together the squares of all the sample values in the buffer, find the average by dividing by n, and then taking the square root. In audio processing, however, we normally bound the sample size (n) to some fixed number (called windowing). This effectively means that we calculate the RMS value over the past n samples.

(As an aside, multiplying by 1/n effectively assigns equal weights to all the terms, making it a rectangular window. Other window equations can be used instead which would favor terms in the middle of the window. This results in even greater accuracy of the RMS value since brand new samples (or old ones at the end of the window) have less influence over the signal’s power.)

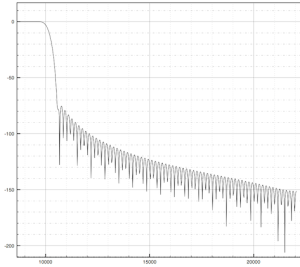

Now that we’ve seen the two modes of detecting a signal’s envelope, we can move on to look at the role of the attack/release times. These values are used in calculating coefficients for a first-order recursive filter (also called a leaky integrator) that processes the values we get from the audio buffer (through one of the two detection methods). Simply stated, we get the sample values from the audio signal and pass them through a low-pass filter to smooth out the envelope.

We calculate the coefficients using the time-constant equation:

g = e ^ ( -1 / (time * sample rate) ),

where time is in seconds, and sample rate in Hz. Once we have our gain coefficients for attack/release, we put them into our leaky integrator equation:

out = in + g * (out – in),

where in is the input sample we detected from the incoming audio, g is either the attack or release gain, and out is the envelope sample value. Here it is in code:

public void GetEnvelope (float[] audioData, out float[] envelope)

{

envelope = new float[audioData.Length];

m_Detector.Buffer = audioData;

for (int i = 0; i < audioData.Length; ++i) {

float envIn = m_Detector[i];

if (m_EnvelopeSample < envIn) {

m_EnvelopeSample = envIn + m_AttackGain * (m_EnvelopeSample - envIn);

} else {

m_EnvelopeSample = envIn + m_ReleaseGain * (m_EnvelopeSample - envIn);

}

envelope[i] = m_EnvelopeSample;

}

}

(Source: code is based on “Envelope detector” from http://www.musicdsp.org/archive.php?classid=2#97, with detection modes added by me.)

The envelope sample is calculated based on whether the current audio sample is rising or falling, with the envIn sample resulting from one of the two detection modes. This is implemented similarly to what is known as a functor in C++. I prefer this method to having another branching structure inside the loop because among other things, it’s more extensible and results in cleaner code (as well as being modular). It could be implemented using delegates/function pointers, but the advantage of a functor is that it retains its own state, which is useful for the RMS calculation as we will see. Here is how the interface and classes are declared for the detection modes:

public interface IEnvelopeDetection

{

float[] Buffer { set; get; }

float this [int index] { get; }

void Reset ();

}

We then have two classes that implement this interface, one for each mode:

A signal’s peak value is the instantaneous sample value while the root mean square is measured over a series of samples, and gives a more accurate account of the signal’s power.

public class DetectPeak : IEnvelopeDetection

{

private float[] m_Buffer;

/// <summary>

/// Sets the buffer to extract envelope data from. The original buffer data is held by reference (not copied).

/// </summary>

public float[] Buffer

{

set { m_Buffer = value; }

get { return m_Buffer; }

}

/// <summary>

/// Returns the envelope data at the specified position in the buffer.

/// </summary>

public float this [int index]

{

get { return Mathf.Abs(m_Buffer[index]); }

}

public DetectPeak () {}

public void Reset () {}

}

This particular class involves a rather trivial operation of just returning the absolute value of a signal’s sample. The RMS detection class is more involved.

/// <summary>

/// Calculates and returns the root mean square value of the buffer. A circular buffer is used to simplify the calculation, which avoids

/// the need to sum up all the terms in the window each time.

/// </summary>

public float this [int index]

{

get {

float sampleSquared = m_Buffer[index] * m_Buffer[index];

float total = 0f;

float rmsValue;

if (m_Iter < m_RmsWindow.Length-1) {

total = m_LastTotal + sampleSquared;

rmsValue = Mathf.Sqrt((1f / (index+1)) * total);

} else {

total = m_LastTotal + sampleSquared - m_RmsWindow.Read();

rmsValue = Mathf.Sqrt((1f / m_RmsWindow.Length) * total);

}

m_RmsWindow.Write(sampleSquared);

m_LastTotal = total;

m_Iter++;

return rmsValue;

}

}

public DetectRms ()

{

m_Iter = 0;

m_LastTotal = 0f;

// Set a window length to an arbitrary 128 for now.

m_RmsWindow = new RingBuffer<float>(128);

}

public void Reset ()

{

m_Iter = 0;

m_LastTotal = 0f;

m_RmsWindow.Clear(0f);

}

The RMS calculation in this class is an optimization of the general equation I stated earlier. Instead of continually summing together all the values in the window for each new sample, a ring buffer is used to save each new term. Since there is only ever 1 new term to include in the calculation, it can be simplified by storing all the squared sample values in the ring buffer and using it to subtract from our previous total. We are just left with a multiply and square root, instead of having to redundantly add together 128 terms (or however big n is). An iterator variable ensures that the state of the detector remains consistent across successive audio blocks.

In the envelope detector class, the detection mode is selected by assigning the corresponding class to the ivar:

public class EnvelopeDetector

{

protected float m_AttackTime;

protected float m_ReleaseTime;

protected float m_AttackGain;

protected float m_ReleaseGain;

protected float m_SampleRate;

protected float m_EnvelopeSample;

protected DetectionMode m_DetectMode;

protected IEnvelopeDetection m_Detector;

// Continued...

public DetectionMode DetectMode

{

get { return m_DetectMode; }

set {

switch(m_DetectMode) {

case DetectionMode.Peak:

m_Detector = new DetectPeak();

break;

case DetectionMode.Rms:

m_Detector = new DetectRms();

break;

}

}

}

Now that we’ve looked at extracting the envelope from an audio signal, we will look at using it to create a compressor/limiter component to be used in Unity. That will be upcoming in part 2.